META.ID-s 3 # 2007

Workshop, Interactive installation, Performance, 3D game engines

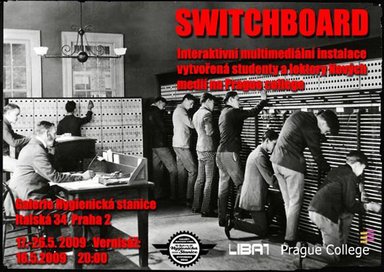

The purpose of META.ID-s III was to develop a 5-day in-situ workshop at PragueCollege (Prague, CZ). The workshop, produced by LIBAT - Hybrid Laboratory for Arts and New Technologies, proposed a collaborative, sensitive and inter-media experience, inviting artists from Slovenia, France and the Czech Republic, students and technology experts to work in a collaborative environment.

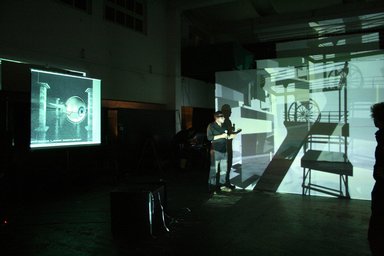

Interested in the use of new media and new technologies in art and design, we presented technological principles such as motion capture, MIDI protocols and sensors. After discussion, we created a hybrid environment interconnecting concepts, non-linear storytelling, aesthetics and technical know-how. Sitting between performance and installation, and crossing media, time and space, it proposed a contemporary, interactive and navigable mixed architecture open to the audience.

The main goal of the workshop was to share and exchange the visions and know-how of the different participants. They explored different types of contemporary media, techniques and languages: performance, video and soundscape creation, interactive 3D environment creation and non-linear storytelling. They also augmented bodies using different types of sensors, MIDI controllers, video tracking techniques and programming systems. The result was an inter-media scenography based on dynamic navigation through a narrative body made of micro-stories and micro-scenes, created especially for the event by the students of the college.

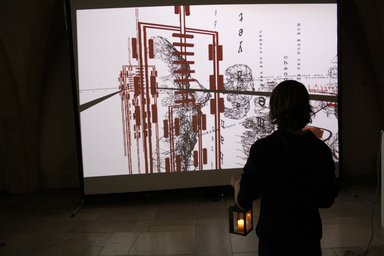

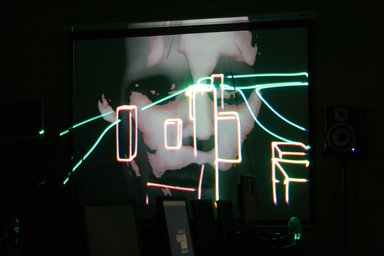

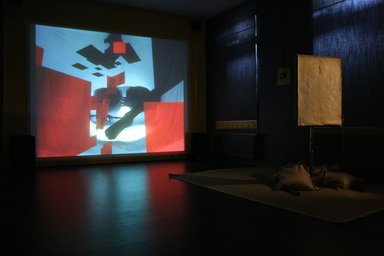

This time, the META.ID-s platform aims to propose a multimedia environment as well as a hybrid scenography, navigable as an installation, where media, performers and the public interact with each other to compose a modular audio-visual architecture built on key principles such as network communication and data streaming.

The narrative outcomes of the installation remain open, as an invitation to travel through storytelling networks and layers rather than a linear composition, and to propose atypical cognitive experiences and systems that hybridize real and digital space.

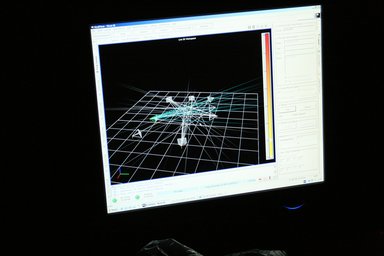

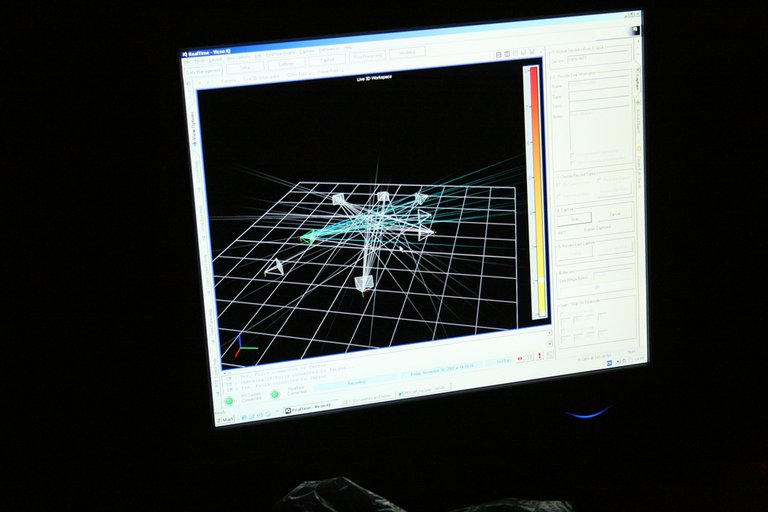

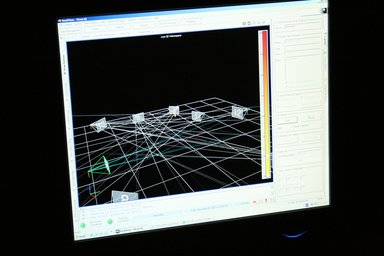

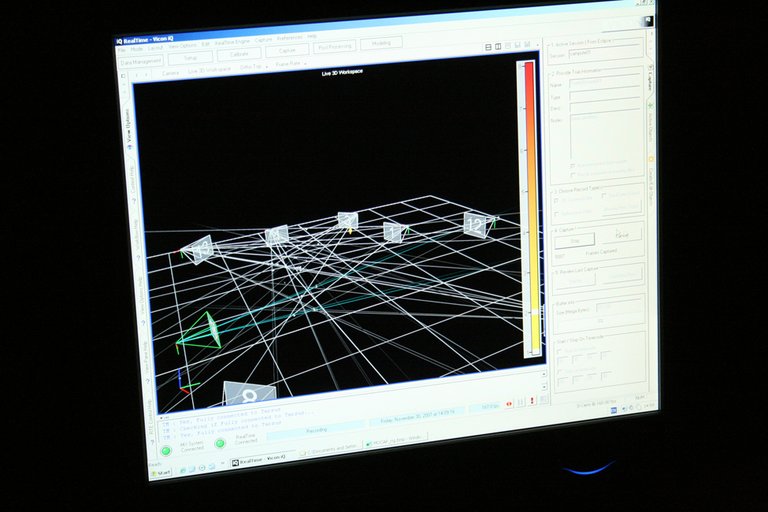

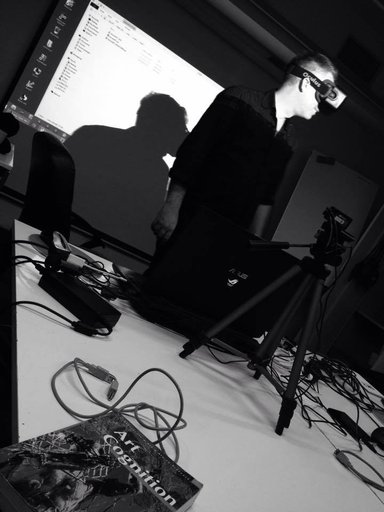

Jan Burianek, who worked at Visual Connection, presented the "Vicon" optical motion capture system. This advanced system uses 8 synchronized, high-frame-rate infrared cameras to track special spheres, enabling the capture of a full body or of any object equipped with them. The system can record the position of each sphere in real space 2000 times per second, using triangulation principles. The data can then be transferred to 3D software to animate, for example, realistic 3D characters or virtual 3D environments. We ran tests with students, recording their own movements, with the expectation of later animating their own custom 3D characters.
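To illustrate the triangulation principle mentioned above, here is a minimal sketch in Python. It is not the Vicon algorithm, which is proprietary and combines many cameras. It only shows the simplest case, assuming two cameras whose positions and viewing rays toward a sphere are already known. The function name `triangulate_midpoint` and its parameters are hypothetical.

```python
def triangulate_midpoint(c1, d1, c2, d2):
    """Estimate a marker position from two camera rays.

    c1, c2: camera positions (x, y, z); d1, d2: ray directions toward the marker.
    Returns the midpoint of the shortest segment joining the two rays.
    Illustrative assumption only, not the method used by any real system.
    """
    def dot(u, v):
        return sum(a * b for a, b in zip(u, v))

    w0 = [p - q for p, q in zip(c1, c2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the marker cannot be located")

    # Parameters of the closest point on each ray.
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = [o + s * k for o, k in zip(c1, d1)]
    p2 = [o + t * k for o, k in zip(c2, d2)]
    return [(u + v) / 2 for u, v in zip(p1, p2)]


# Two cameras 4 units apart, both looking at a marker placed at (1, 2, 5).
marker = triangulate_midpoint([0, 0, 0], [1, 2, 5], [4, 0, 0], [-3, 2, 5])
```

With more than two cameras, as in the 8-camera setup described here, each additional ray would further constrain the estimate and reduce the effect of occlusions.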

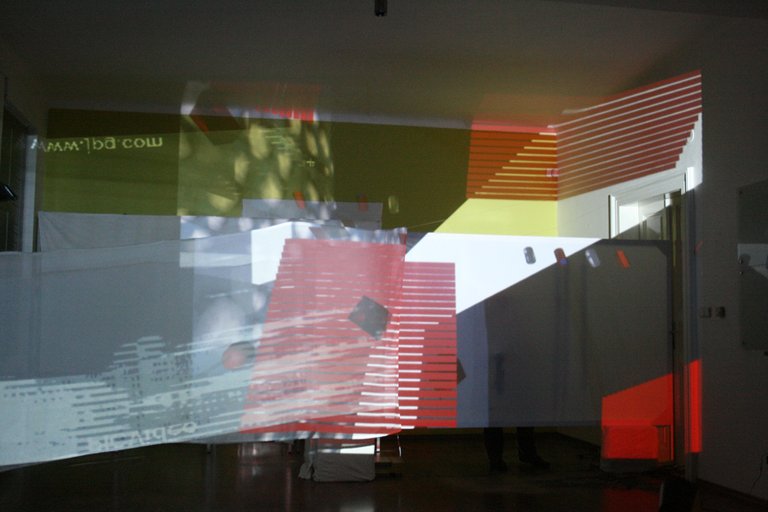

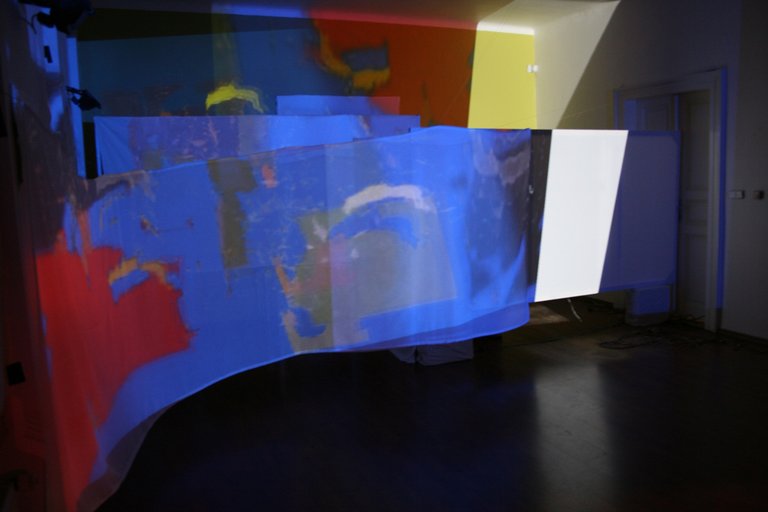

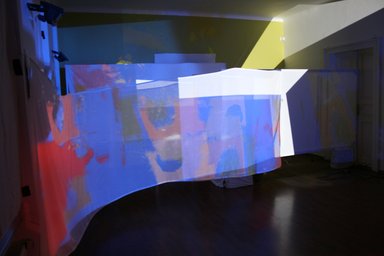

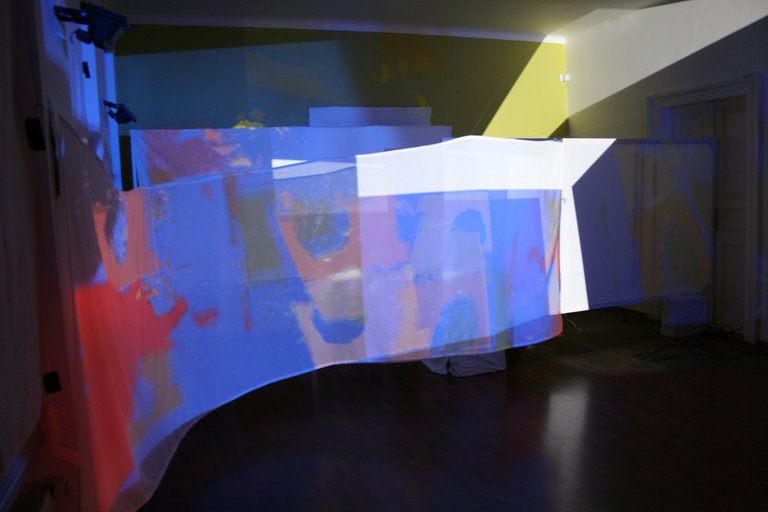

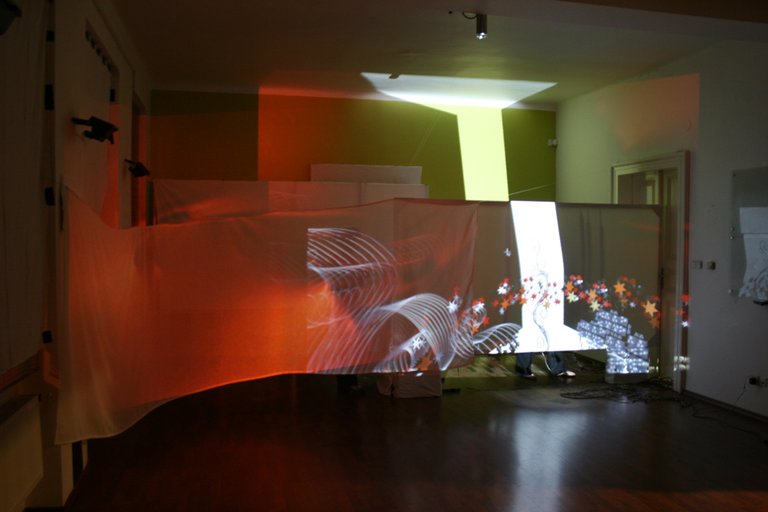

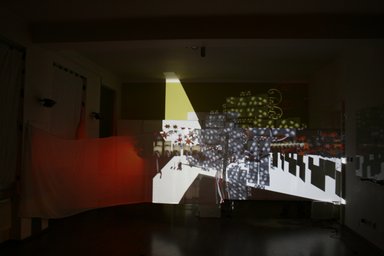

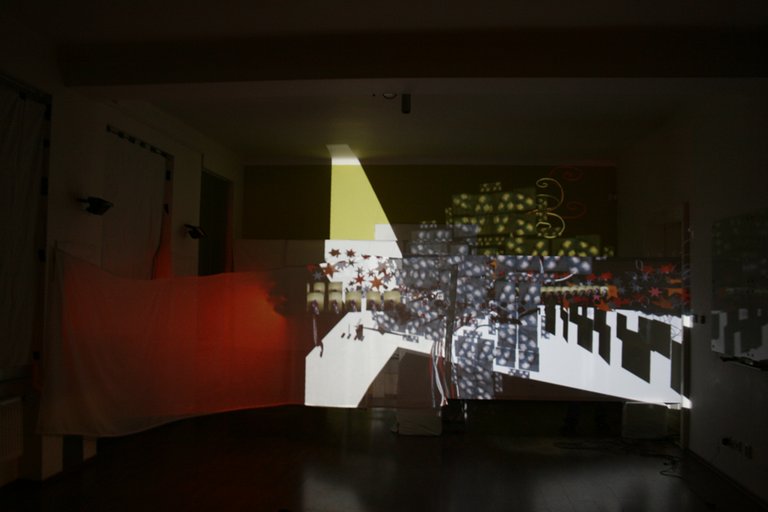

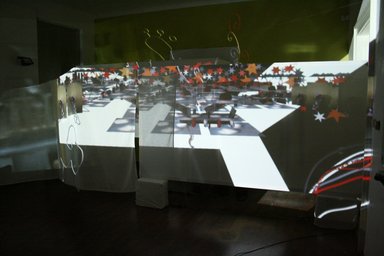

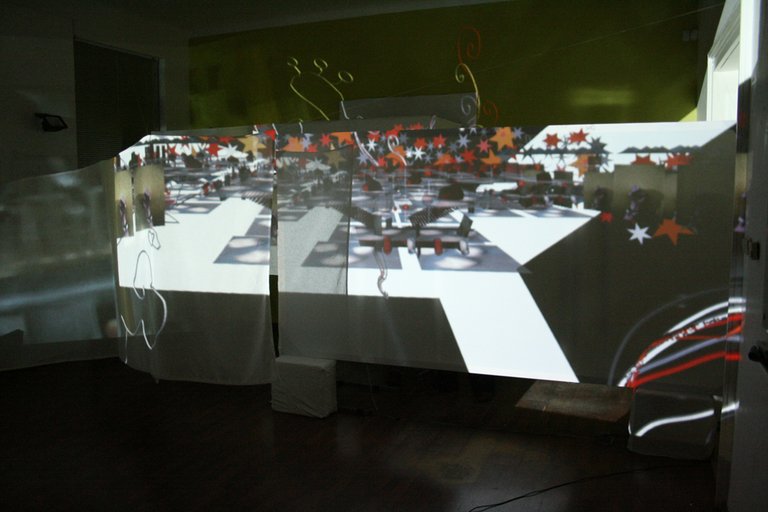

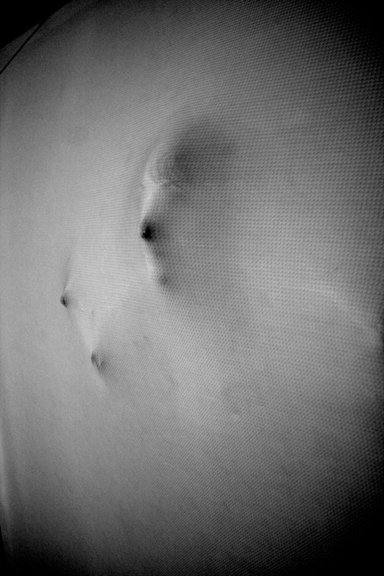

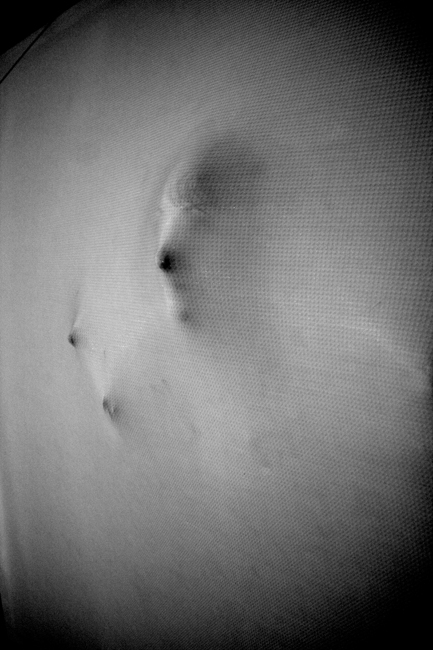

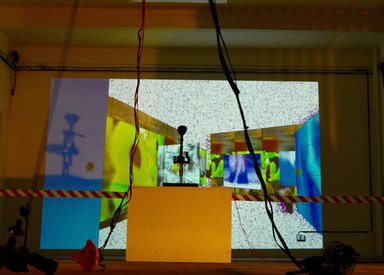

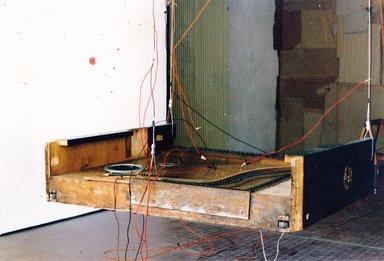

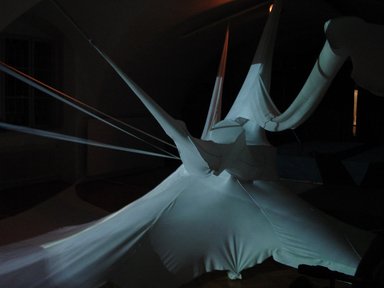

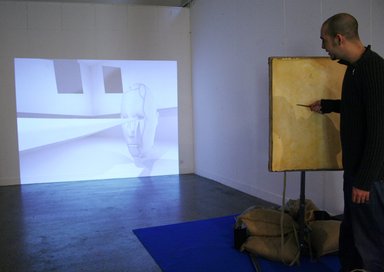

After discussing ideas and concepts, we developed an interactive scenography based on an architecture of semi-transparent fabric and elastic materials such as Lycra, which received the 2 final projections: one was the output of a live video mix, and the other was the output of navigation and interaction inside a real-time 3D environment developed by the students. The elastic screens integrated sensors to capture different material behaviors, such as bend information, and electrical contacts that reacted to a custom "data glove" prepared especially for the installation.

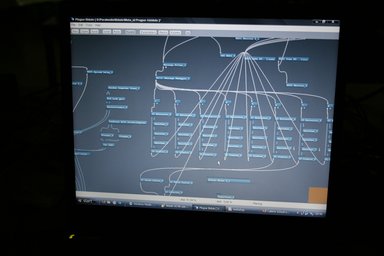

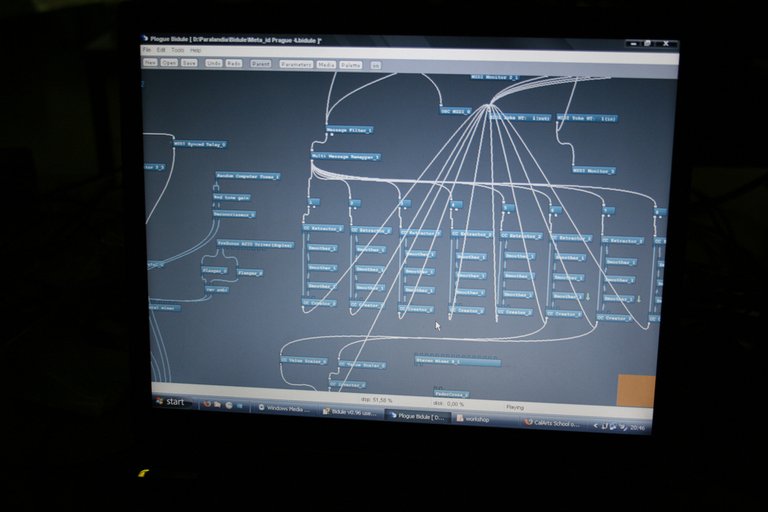

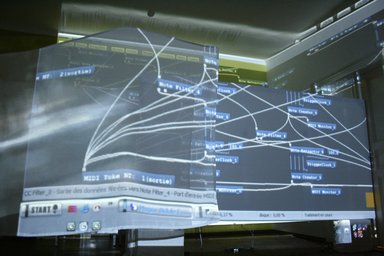

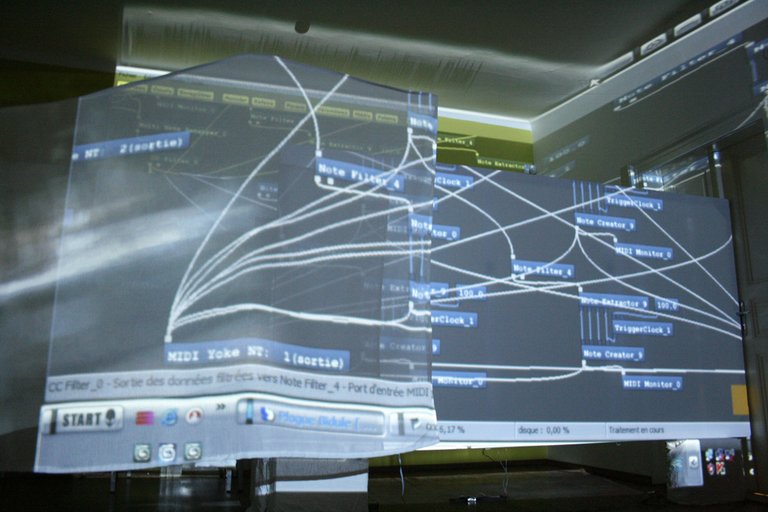

Jernej Cernalogar (SLO), Pascal Silondi (FR) and Pamela Ranfaing (FR) gave lectures presenting the MIDI protocol, the network system they installed, and software and techniques for live video mixing, interactive soundscape design and interactive 3D environment creation. They presented different types of sensors, digital or analog, sensitive to temperature, bio waves, bend, acceleration/inclination, and other triggers. They discussed how to connect them into a network able to receive, process and send information, enabling live interaction between users and digital environments. They proposed developing special devices during the workshop, based on the students' ideas.
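As a rough illustration of how a sensor reading can enter such a network as MIDI data, the sketch below scales a raw value to a 3-byte MIDI Control Change message. The 10-bit input range (0 to 1023), the controller number 20 and the function name are assumptions made for the example; they do not describe the devices actually built during the workshop.

```python
def sensor_to_control_change(reading, channel=0, controller=20, max_reading=1023):
    """Convert a raw sensor reading into a MIDI Control Change message.

    reading: raw sensor value, assumed here to lie between 0 and max_reading.
    channel: MIDI channel, 0 to 15. controller: controller number, 0 to 127.
    Returns 3 bytes: status (0xB0 + channel), controller number, 7-bit value.
    """
    if not 0 <= channel <= 15:
        raise ValueError("MIDI channel must be between 0 and 15")
    if not 0 <= controller <= 127:
        raise ValueError("controller number must be between 0 and 127")

    # Clamp out-of-range readings, then scale to the 7-bit MIDI range.
    clamped = max(0, min(reading, max_reading))
    value = clamped * 127 // max_reading
    return bytes([0xB0 | channel, controller, value])


# A fully bent sensor on channel 1 (index 0) sends the maximum value.
message = sensor_to_control_change(1023)
```

Any MIDI-capable software, such as the tools presented during the lectures, could then route a message of this kind to sound or video parameters.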

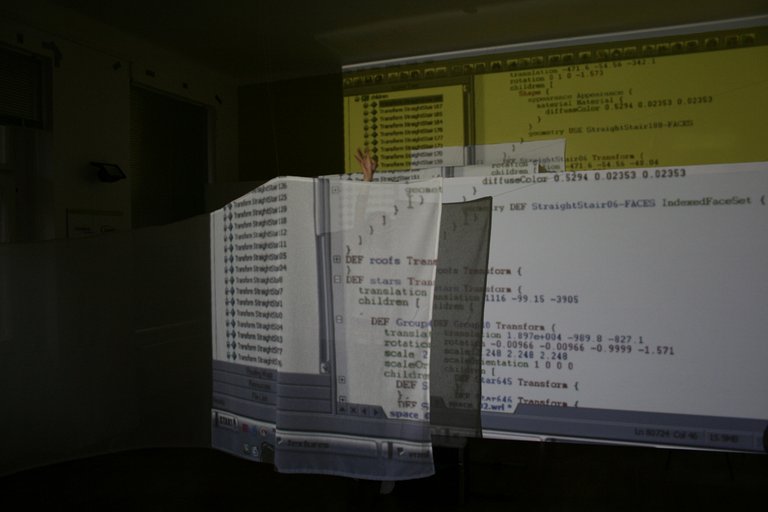

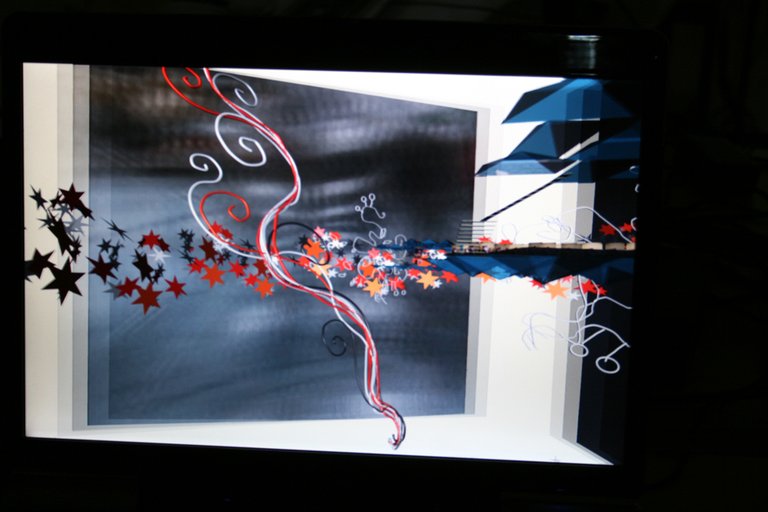

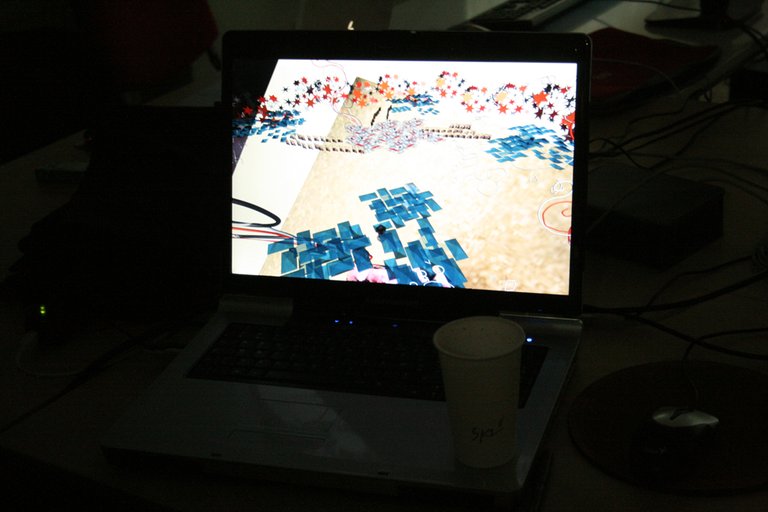

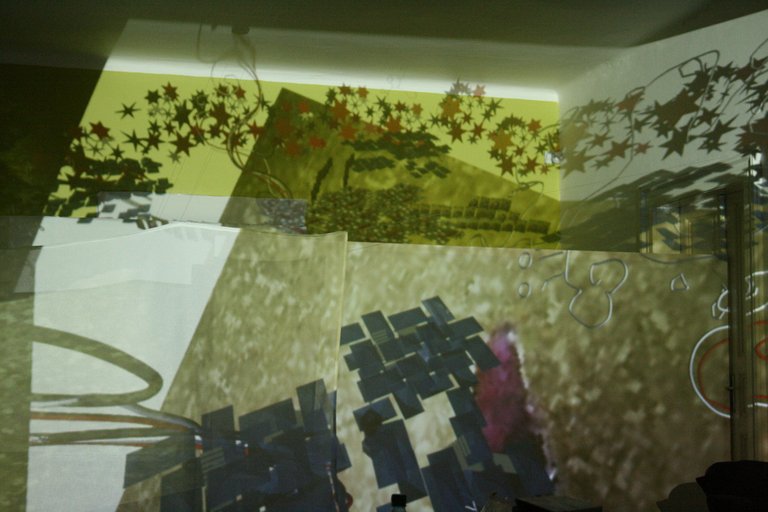

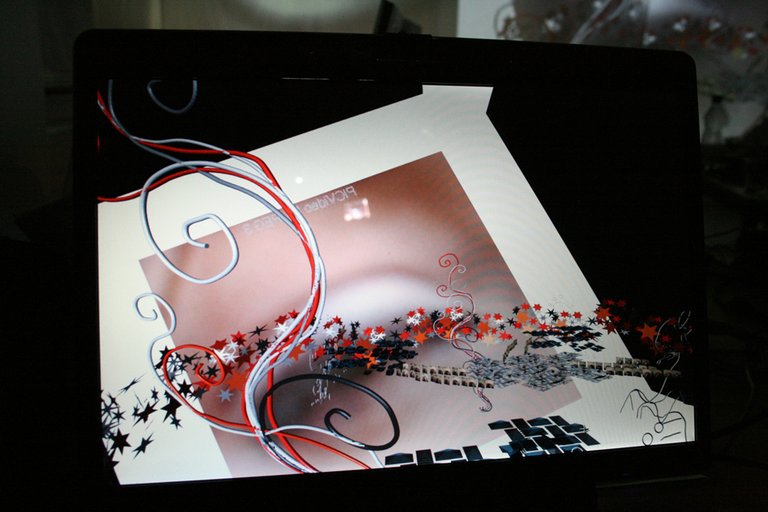

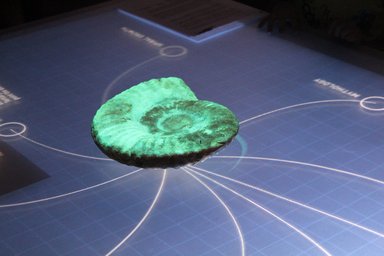

We developed a semi-transparent screen architecture rather than a frontal projection, to offer users an immersive experience. We projected 2 types of visual environments and one interactive soundscape. The first visual environment was the output of a live video mix based on video sequences created by students and controlled by sensors integrated in the Lycra screen. The second was the result of the users' navigation and interaction with objects in a real-time 3D environment based on VRML (Virtual Reality Modeling Language) and designed by students. That environment presented 3D objects and architectural fragments that used pictures and video as textures.

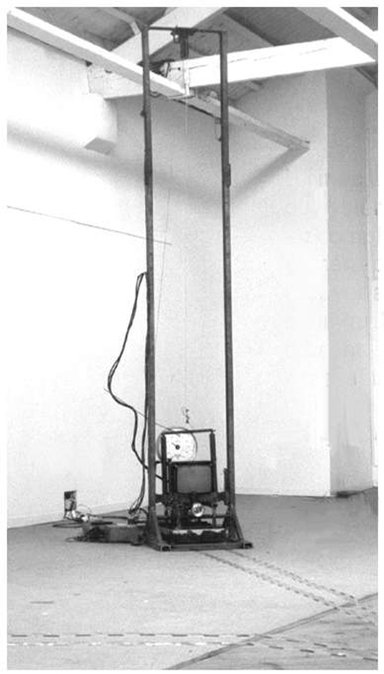

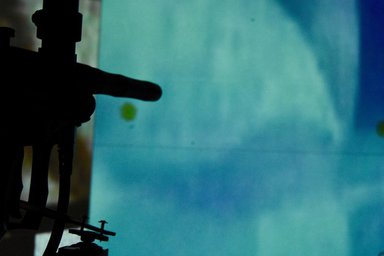

We ran experiments controlling servo motors with MIDI messages generated by our sensors. This let us dynamically control the position of the projection by moving 2 mirrors that reflected the light of the projector.
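The mapping from a MIDI message to a mirror position can be sketched as follows. This is only an illustration under stated assumptions: a hobby-style servo with a 180-degree range driven by pulses of 1000 to 2000 microseconds, and a hypothetical function name. The hardware and values used in the workshop are not documented here.

```python
def control_change_to_servo(message, min_pulse_us=1000, max_pulse_us=2000, max_angle=180.0):
    """Map a 3-byte MIDI Control Change message to a servo target.

    Returns (angle in degrees, pulse width in microseconds).
    The pulse range and angle range are assumed values for a typical servo.
    """
    status, _controller, value = message
    if status & 0xF0 != 0xB0:
        raise ValueError("not a Control Change message")

    # MIDI data bytes carry 7 bits, so the value ranges from 0 to 127.
    fraction = (value & 0x7F) / 127
    angle = fraction * max_angle
    pulse_us = round(min_pulse_us + fraction * (max_pulse_us - min_pulse_us))
    return angle, pulse_us


# Controller value 127 turns the mirror to the end of its range.
angle, pulse_us = control_change_to_servo(bytes([0xB0, 20, 127]))
```

With one such mapping per mirror, two controller numbers would be enough to steer the projection horizontally and vertically.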

We presented different software and principles: Bidule, EyesWeb and Pure Data for MIDI interaction and soundscape management; 3ds Max and VRML (Virtual Reality Modeling Language) for the creation of interactive 3D environments; and Resolume for live video mixing.

The workshop was produced by Libat and led by:

- Pascal Silondi (FR): Artist, Director of Libat and teacher/coach in the Interactive Media department at PragueCollege.

Scenography, interactive principles programming, haptic interface design, MIDI controllers, mechanics.

- Pamela Ranfaing (FR): Artist, General Coordinator of Libat and teacher in the Interactive Media department at PragueCollege.

Scenography, stage design, haptic interface design.

- Jernej Cernalogar (SLO): Artist.

Scenography, soundscape creation/design and programming, haptic interface design, interactive principles programming, MIDI controllers, mechanics.

- Marie Silondi (CZ): Artist - Designer, Production Manager of Libat.

Scenography, stage design, haptic interface design.

© LIBAT 2013